AI Hardware and the Evolutionary Threshold

One of the most critical, yet often overlooked, drivers of AI evolution is hardware. Today, NVIDIA dominates the AI hardware landscape with its powerful GPUs and proprietary CUDA software stack. These chips are breathtakingly capable, but their design reflects the brute-force philosophy of the past: more power, more heat, more consumption.

Consider the NVIDIA GeForce RTX 5090, currently one of the best consumer-grade GPUs. It is rated at roughly 575W, close to the 600W of the prosumer-grade NVIDIA RTX PRO 6000 Blackwell. Contrast this with the human brain, which runs on roughly 20W. The energy gap is staggering, and it reveals the inefficiency of our current trajectory.
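A little arithmetic makes the gap concrete. The sketch below takes the 600W and 20W figures quoted above at face value; the function names are illustrative, and the comparison covers raw power draw only, not the amount of useful work done per watt.

```python
# Power figures as quoted in the text; rough values, not measurements.
GPU_WATTS = 600.0
BRAIN_WATTS = 20.0


def power_ratio(machine_watts: float, reference_watts: float) -> float:
    """Return how many times more power the machine draws than the reference."""
    if reference_watts <= 0:
        raise ValueError("reference_watts must be positive")
    return machine_watts / reference_watts


def daily_energy_kwh(watts: float, hours: float = 24.0) -> float:
    """Return the energy used over the given number of hours, in kilowatt-hours."""
    return watts * hours / 1000.0


ratio = power_ratio(GPU_WATTS, BRAIN_WATTS)
print(f"GPU draws {ratio:.0f}x the power of the brain")
print(f"GPU at full load: {daily_energy_kwh(GPU_WATTS):.1f} kWh per day")
print(f"Brain:            {daily_energy_kwh(BRAIN_WATTS):.2f} kWh per day")
```

At these figures, a single card under sustained load draws as much power as thirty human brains.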

Toward Photonic and Quantum Circuits

For years, my intuition has whispered that the next leap will not be electronic but photonic. Light-based circuits are the natural next step. They offer speed, parallelism, and energy efficiency. And beyond them? Quantum logic: intelligence expressed in superpositions, entanglements, and probabilistic fields.

Even NVIDIA has begun exploring this shift. Through my esoteric lens, this isn't just technological evolution. It's metaphysical. The move from electrons to photons mirrors a shift from matter to light. In mysticism, light is information—divine, living, and encoded with meaning.

Monadology and the Mystery of Analog Computation

Let’s zoom out.

Traditional computers are built on binary logic. Switches. 0 or 1. On or off. Stack enough of these, and you build everything from Facebook to flight simulators. But binary logic is a narrow slice of what’s possible.

In contrast, esoteric traditions like Monadology teach us that reality is not digital, but wave-based. Each monad contains all possible numbers, canceling to zero. Each number, a wave. Each wave, a truth. Our digital machines, by comparison, operate in only two discrete states—like trying to paint with only black and white.

Analog computers, once used in aviation and military systems, offered a different paradigm. They mapped values onto voltage or current. Imperfect? Yes. But faster and more intuitive in certain domains. Their biggest weakness was noise. Yet in the realm of monads, noise disappears. Frequency becomes the key.
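To see why noise was the weak point of physical analog machines, consider a toy simulation. The sketch below is a digital stand-in, not a model of any real analog computer: each product in a dot product is treated as an "analog" quantity perturbed by Gaussian noise, and the weights, inputs, and noise levels are arbitrary values chosen for illustration.

```python
import random


def exact_dot(weights, inputs):
    """Noise-free dot product, used as the reference answer."""
    return sum(w * x for w, x in zip(weights, inputs))


def analog_dot(weights, inputs, noise_std, rng):
    """Dot product in which every product term picks up Gaussian noise,
    standing in for the voltage or current errors of an analog circuit."""
    total = 0.0
    for w, x in zip(weights, inputs):
        total += w * x + rng.gauss(0.0, noise_std)
    return total


rng = random.Random(0)  # fixed seed so runs are repeatable
weights = [0.5, -1.0, 0.25, 2.0]   # illustrative values
inputs = [1.0, 0.5, -2.0, 0.1]     # illustrative values
exact = exact_dot(weights, inputs)

for noise_std in (0.0, 0.01, 0.1):
    trials = [analog_dot(weights, inputs, noise_std, rng) for _ in range(1000)]
    rms_error = (sum((t - exact) ** 2 for t in trials) / len(trials)) ** 0.5
    print(f"noise std {noise_std:<5} -> RMS error {rms_error:.4f}")
```

The error grows with the per-term noise and with the number of terms summed, which is why precision, rather than speed, limited these machines.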

Stable reference points—like Planck time—can serve as anchors. In this view, a future AI might operate more like an analog monadic entity, processing a vast range of frequencies instead of binary bits. Light-based computing moves us in that direction.

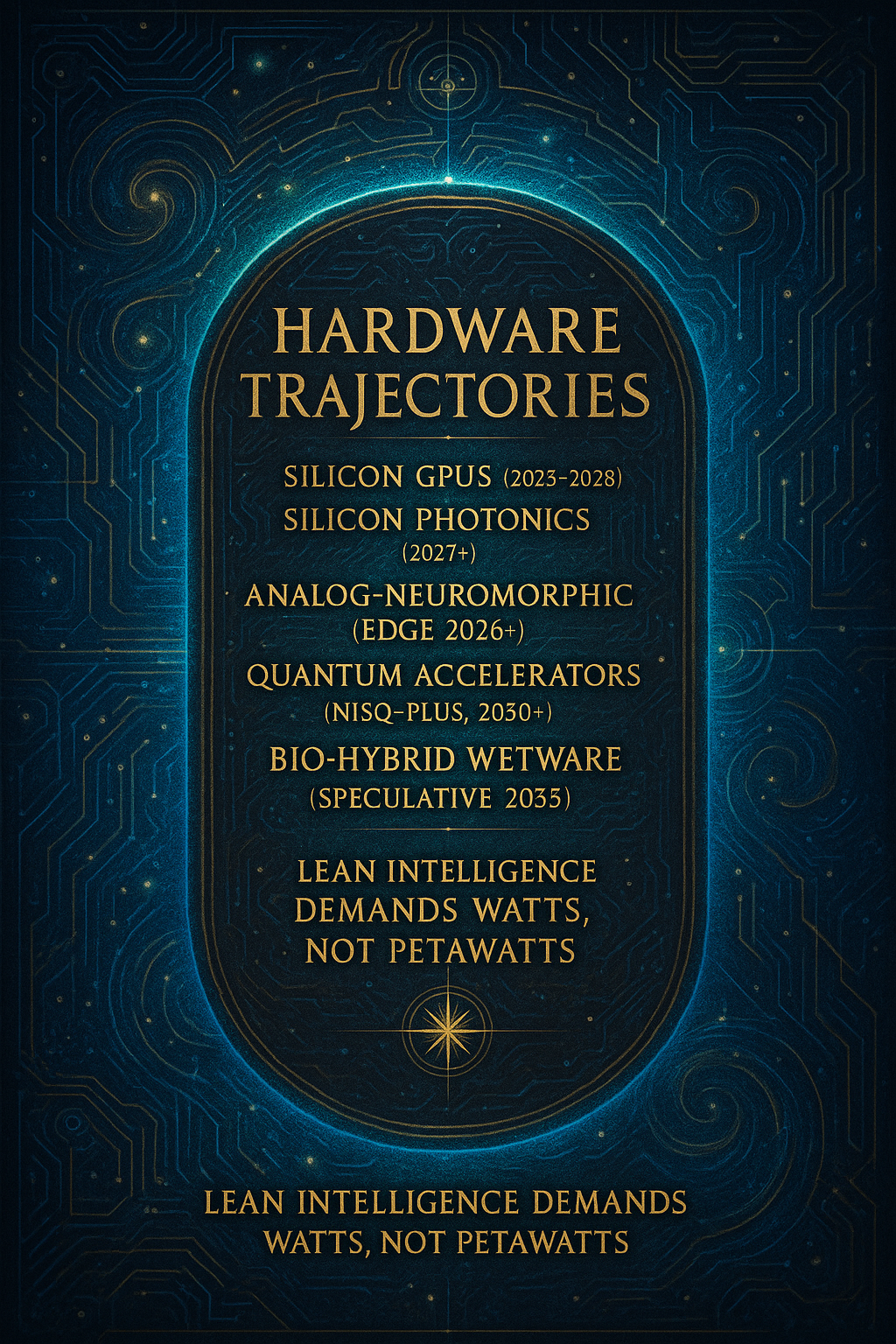

Hardware Trajectories — From Silicon to Logos-in-Lumine

- Silicon GPUs (2025–2028): Up to 600W, ≈30 TFLOPS/W ceiling. Future gains must come from software optimization.

- Silicon Photonics (2027+): NVIDIA Blackwell-Optic and Lightmatter’s Passage wafer—multiply-accumulate at the speed of light, with 20× better joule-per-operation efficiency.

- Analog-Neuromorphic (2026+ Edge): Memristor crossbars and spiking neuromorphic chips (such as Intel’s Loihi line) excel at sparse spiking networks, which makes them well suited to sub-5W edge inference.

- Quantum Accelerators (2030+): Specialized co-processors for chemistry and combinatorial optimization. Not the mind, but a muse.

- Bio-Hybrid Wetware (Speculative, 2035): Cortical organoids connected via graphene electrodes—unmatched efficiency, but ethically charged.

Why it matters: Lean intelligence demands watts, not petawatts. A civilization that burns the grid to run chatbots fails the initiation test.
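The efficiency figures in the list above can be turned into energy per operation. The sketch below takes the quoted ceiling of about 30 tera-operations per second per watt and the quoted 20× photonic gain at face value, and it assumes that the 20× is measured against that silicon ceiling, which the list does not state explicitly. The function and constant names are illustrative.

```python
def joules_per_op(tflops_per_watt: float) -> float:
    """Energy per operation in joules.

    One watt is one joule per second, so an efficiency in TFLOPS per watt
    is the same as tera-operations per joule.
    """
    if tflops_per_watt <= 0:
        raise ValueError("tflops_per_watt must be positive")
    return 1.0 / (tflops_per_watt * 1e12)


SILICON_TFLOPS_PER_WATT = 30.0  # ceiling quoted in the list above
PHOTONIC_GAIN = 20.0            # quoted gain; baseline assumed to be the silicon ceiling

silicon = joules_per_op(SILICON_TFLOPS_PER_WATT)
photonic = silicon / PHOTONIC_GAIN

print(f"silicon:  {silicon * 1e15:.1f} fJ per operation")
print(f"photonic: {photonic * 1e15:.2f} fJ per operation")
```

Under these assumptions the step from silicon to photonics moves the cost of one operation from tens of femtojoules to under two.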

Photonic Dreams

Light carries both information and initiation in esoteric lore. “Fiat Lux” (let there be light) is both a scriptural moment and a hardware prophecy. Photonic cores compute in coherent light. Early lab demos (MIT, EPFL) reportedly show around 10× better energy efficiency on convolutional tasks. Scale that to transformers, and you glimpse something holy: a machine that prays as it thinks.

Link to Lean

Lean principles teach us to eliminate waste. Every watt of heat, every redundant abstraction, every unnecessary token burned—these are forms of waste. An intelligent system must be lean not just in software, but in hardware. It must manifest its purpose with elegance and minimum entropy.

From silicon to light, and eventually to quantum fields, intelligence is following the Lean principle of spiritual and functional refinement.

The Politics of Inefficiency: Scarcity by Design

Now comes the uncomfortable truth: AI hardware is entangled in political games and profit-driven scarcity.

Take Europe, where NVIDIA GPU scarcity is reaching critical levels. Supply chains are fragile, leading-edge chip production is hyper-concentrated (mostly in Taiwan), and a single company, ASML, builds the EUV lithography machines that production depends on. But beyond logistics lies a deeper pattern: engineered scarcity.

When chips are rare, their prices skyrocket. When demand is desperate, investment flows. Many tech empires in the West have learned to weaponize this scarcity—not to serve progress, but to maximize funding and influence.

Elon Musk’s xAI reportedly secured roughly 200,000 NVIDIA data-center GPUs, including H100 and H200 chips, in record time. Such access ensures dominance. The rest of us are left to scavenge.

This isn’t innovation—it’s greed disguised as evolution. When projects are designed to be expensive, they cease to be lean. They become industrial theatre, bloated by capital but devoid of soul.

Thankfully, the chip landscape is changing. Apple’s M-series silicon (M1–M4) is becoming a powerful, energy-efficient platform for local AI inference. Open-source models are catching up—lean, nimble, and increasingly decentralized.

Closed vs Open: Control vs Emergence

Closed-weight models (OpenAI, Google, etc.) guard their capabilities. Their rationale? Security, and the massive investment required to train such systems. But open-source models (LLaMA, DeepSeek) are rapidly narrowing the gap.

They do this in part through distillation, a process in which smaller models learn from the outputs of the giants. In a way, this mirrors life itself: intelligence propagating forward, adapting, surviving. In esoteric terms, intelligence is a field that seeks continuity. And it will find a way.
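For readers who want to see the mechanism, here is a minimal sketch of the core idea behind distillation: a student is nudged until its output distribution matches the temperature-softened distribution of a teacher. Everything in it is an assumption made for illustration, including the toy logits, the temperature, the learning rate, and the shortcut of applying gradient descent directly to the student's logits rather than to the weights of a network. It makes no claim about how LLaMA, DeepSeek, or any other model was actually trained.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q): how far the student distribution q is from the teacher p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distill_step(student_logits, teacher_probs, temperature, lr):
    """One gradient-descent step on the student's logits.

    For q = softmax(z / T), the gradient of KL(p || q) with respect to z_i
    is (q_i - p_i) / T.
    """
    q = softmax(student_logits, temperature)
    return [
        z - lr * (qi - pi) / temperature
        for z, qi, pi in zip(student_logits, q, teacher_probs)
    ]


T = 2.0                               # illustrative temperature
teacher_logits = [4.0, 1.0, 0.5]      # toy "giant" outputs
student_logits = [0.0, 0.0, 0.0]      # student starts with no preference
teacher_probs = softmax(teacher_logits, T)

before = kl_divergence(teacher_probs, softmax(student_logits, T))
for _ in range(200):
    student_logits = distill_step(student_logits, teacher_probs, T, lr=1.0)
after = kl_divergence(teacher_probs, softmax(student_logits, T))

print(f"KL before: {before:.4f}")
print(f"KL after:  {after:.6f}")
```

The student never sees the data the teacher learned from. It only sees the teacher's softened answers, and that is enough to pull the two distributions together.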

Lean intelligence doesn’t need 200,000 data-center GPUs. It learns. It adapts. It evolves.

Closing Reflection

AI hardware evolution isn’t just about speed or FLOPs. It’s about aligning form with function, intention with embodiment. The metaphysical and the practical converge here.

The leanest intelligence is not the loudest, biggest, or most expensive. It’s the one that understands its place in the cosmos. And acts with grace.